Supervising Cloud Infrastructure is an important aspect of Cloud administration and Azure SQL Databases are no exception. This is something we are continuously improving at my company.

On-prem, DBAs often rely on well-established products but with Cloud-based architectures, often implemented through DevOps projects and developers, monitoring should be been redefined and include some new topics as:

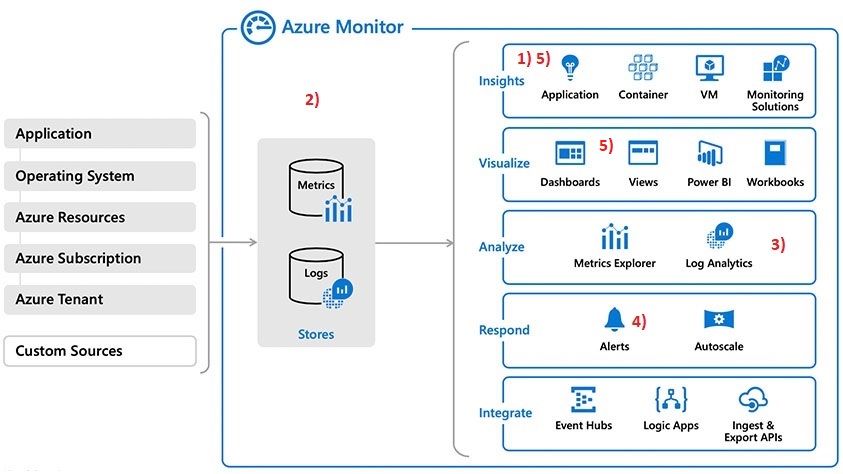

1) Cloud service usage and fees observability

2) Metrics and events detection that could affect bottom line

3) Implementing a single platform to report all data that comes from different sources

4) Trigger rules with data if workload reaches over or drops below certain levels or when an event is enough relevant to not meet the configuration standard and implies unwanted extra billing or when it compromises the company security rules.

5) Monitoring of the user experience

A key benefit often discussed about Cloud computing, and mainly driven by DevOps, is how it enables agility. One of the meaning of term agility is tied to the rapid provisioning of computer resources (in seconds or minutes) and this shortening provisioning path enables work to start quickly. You may be tempted to grant some provisioning permissions to DEV teams and from my opinion this is not a bad thing, but it may come with some drawbacks if not under control by Ops team including database area. Indeed, for example I have in mind some real cases including architecture configuration drift, security breaches created by unwanted item changes, or idle orphan resources for which you keep being charged. All of these scenarios may lead either to security issues or extra billing and I believe it is important to get clear visibility of such events.

In my company, Azure built-in capabilities with Azure Monitor architecture are our first target (at least in a first stage) and seem to address the aforementioned topics. To set the context, we already relied on Azure Monitor infrastructure for different things including Query Performance Insight, SQL Audit analysis through Log Analytics and Azure alerts for some performance metrics. Therefore, it was the obvious way to go further by adding activity log events to the story.

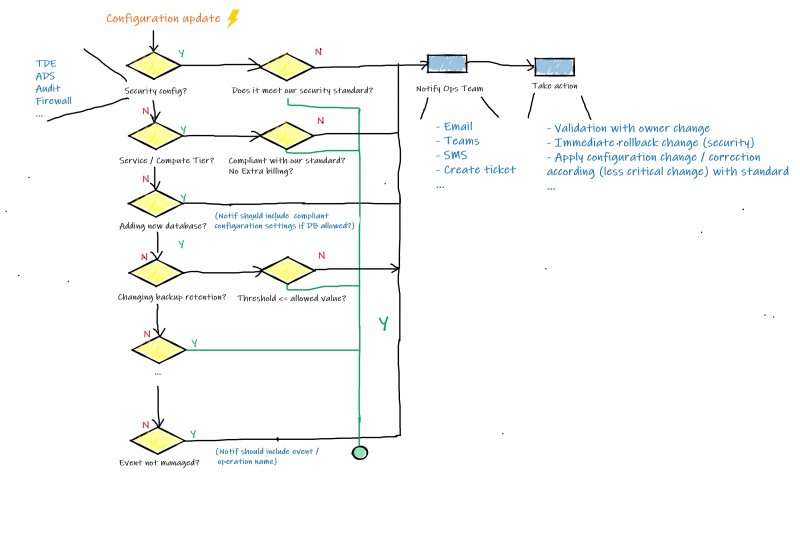

In this blog post, let’s focus on the items 2) 4). I would like to share some experimentations and thoughts about them. As a reminder, items 2) 4) are about catching relevant events to help identifying configuration and security drifts and performing actions accordingly. In addition, as many event-based architectures, additional events may appear or evolve over the time and we started thinking about the concept with the following basic diagram …

… that led to the creation of the two following workflows:

– Workflow 1: To get notified immediately for critical events that may compromise security or lead immediately to important extra billing

– Workflow 2: To get a report of other misconfigured items (including critical ones) on schedule basis but don’t require quick responsiveness of Ops team.

Concerning the first workflow, using alerts on activity logs, action groups and webhooks as input of an Azure automation runbook appeared to be a good solution. On another side, the second one only requires running an Azure automation workbook on schedule basis. In fact, this is the same runbook but with different input parameters according to the targeted environment (e.g. PROD / ACC / INT). In addition, the runbook should be able to identity unmanaged events and notified Ops team who will decide either to skip it or to integrate it to runbook processing.

Azure alerts which can be divided in different categories including metric, log alerts and activity log alerts. The last one drew our attention because it allows getting notified for operation of specific resources by email or by generating JSON schema reusable from Azure Automation runbook. Focusing on the latter, we had come up (I believe) with what we thought was a reasonable solution.

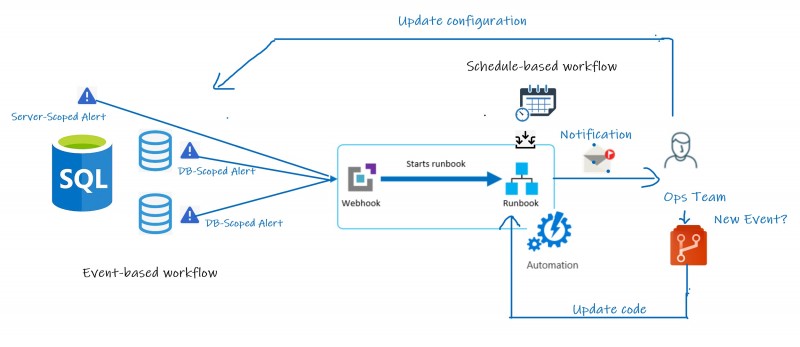

Here the high-level picture of the architecture we have implemented:

1- During the creation of an Azure SQL Server or a database, corresponding alerts are added with Administrative category with a specific scope. Let’s precise that concerned operations must be registered with Azure Resource Manager in order to be used in Activity Log and fortunately they are all including in the Microsoft.Sql resource provider in this case.

2- When an event occurs on the targeted environment, an alert is triggered as well as the concerned runbook.

3- The execution of the same runbook but with different input parameters is scheduled on weekly basis to a general configuration report of our Azure SQL environments.

4- According the event, Ops team gets notified and acts (either to update misconfigured item, or to delete the unauthorized item, or to update runbook code on Git Repo to handle the new event and so on …)

The skeleton of the Azure automation runbook is pretty similar to the following one:

param

(

[Parameter (Mandatory=$false)]

[object] $WebhookData

,

[parameter(Mandatory=$False)]

[ValidateSet("PROD","ACC","INT")]

[String]$EnvTarget

,

[parameter(Mandatory=$False)]

[Boolean]$DebugMode = $False

)

If ($WebhookData)

{

# Logic to allow for testing in test pane

If (-Not $WebhookData.RequestBody){

$WebhookData = (ConvertFrom-Json -InputObject $WebhookData)

}

$WebhookBody = (ConvertFrom-Json -InputObject $WebhookData.RequestBody)

$schemaId = $WebhookBody.schemaId

If ($schemaId -eq "azureMonitorCommonAlertSchema") {

# This is the common Metric Alert schema (released March 2019)

$Essentials = [object] ($WebhookBody.data).essentials

# Get the first target only as this script doesn't handle multiple

$status = $Essentials.monitorCondition

# Focus only on succeeded or Fired Events

If ($status -eq "Succeeded" -Or $Status -eq "Fired")

{

# Extract info from webook

$alertTargetIdArray = (($Essentials.alertTargetIds)[0]).Split("/")

$SubId = ($alertTargetIdArray)[2]

$ResourceGroupName = ($alertTargetIdArray)[4]

$ResourceType = ($alertTargetIdArray)[6] + "/" + ($alertTargetIdArray)[7]

# Determine code path depending on the resourceType

if ($ResourceType -eq "microsoft.sql/servers")

{

# DEBUG

Write-Output "This is a SQL Server Resource."

$firedDate = $Essentials.firedDateTime

$AlertContext = [object] ($WebhookBody.data).alertContext

$channel = $AlertContext.channels

$EventSource = $AlertContext.eventSource

$Level = $AlertContext.level

$Operation = $AlertContext.operationName

$Properties = [object] ($WebhookBody.data).alertContext.properties

$EventName = $Properties.eventName

$EventStatus = $Properties.status

$Description = $Properties.description_scrubbed

$Caller = $Properties.caller

$IPAddress = $Properties.ipAddress

$ResourceName = ($alertTargetIdArray)[8]

$DatabaseName = ($alertTargetIdArray)[10]

$Operation_detail = $Operation.Split('/')

# Check firewall rules

If ($EventName -eq 'OverwriteFirewallRules'){

Write-Output "Firewall Overwrite is detected ..."

# Code to handle firewall update event

}

# Update DB => No need to be monitored in real time

Elseif ($EventName -eq 'UpdateDatabase') {

# Code to handle Database config update event or skip

}

# Create DB

Elseif ($EventName -eq 'CreateDatabase' -Or `

$Operation -eq 'Microsoft.Sql/servers/databases/write'){

Write-Output "Azure Database creation has been detected ..."

# Code to handle Database creation event or skip

}

# Delete DB

Elseif ($EventName -eq 'DeleteDatabase' -Or `

$Operation -eq 'Microsoft.Sql/servers/databases/delete') {

Write-Output "Azure Database has been deleted ..."

# Code to handle Database deletion event or skip

}

Elseif ($Operation -eq 'Microsoft.Sql/servers/databases/transparentDataEncryption/write') {

Write-Output "Azure Database Encryption update has been detected ..."

# Code to handle Database encryption update event or skip

}

Elseif ($Operation -eq 'Microsoft.Sql/servers/databases/auditingSettings/write') {

Write-Output "Azure Database Audit update has been detected ..."

# Code to handle Database audit update event or skip

}

Elseif ($Operation -eq 'Microsoft.Sql/servers/databases/securityAlertPolicies/write' -or $Operation -eq 'Microsoft.Sql/servers/databases/vulnerabilityAssessments/write') {

Write-Output "Azure ADS update has been detected ..."

# Code to handle ADS update event or skip

}

ElseIf ($Operation -eq 'Microsoft.Sql/servers/databases/backupShortTermRetentionPolicies/write'){

Write-Output "Azure Retention Backup has been modified ..."

# Code to handle Database retention backup update event or skip

}

# ... other ones

Else {

Write-Output "Event not managed yet "

}

else {

# ResourceType not supported

Write-Error "$ResourceType is not a supported resource type for this runbook."

}

}

}

Else {

# The alert status was not 'Activated' or 'Fired' so no action taken

Write-Verbose ("No action taken. Alert status: " + $status) -Verbose

}

}

Else{

# SchemaID doesn't correspond to azureMonitorCommonAlertSchema =>> Skip

Write-Host "Skip ..."

}

}

Else {

Write-Output "No Webhook detected ... switch to normal mode ..."

If ([String]::IsNullOrEmpty($EnvTarget)){

Write-Error '$EnvTarget is mandatory in normal mode'

}

#########################################################

# Code for a complete check of Azure SQL DB environment #

#########################################################

}

Some comments about the PowerShell script:

1) Input parameters should include either the Webhook data or specific parameter values for a complete Azure SQL DB check.

2) The first section should include your own functions to respond to different events. In our context, currently we drew on DBAChecks thinking to develop a derived model but why not using directly DBAChecks in a near future?

3) When an event is triggered, a JSON schema is generated and provides insight. The point here is you must navigate through different properties according to the operation type (cf. BOL).

4) The increase of events to manage could be a potential issue making the runbook fat especially if we keep both the core functions and event processing. To mitigate this topic, we are thinking to move functions into modules in Azure automation (next step).

Bottom line

Thanks to Azure built-in capabilities we improved our visibility of events that occur on the Azure SQL environment (both expected and unexcepted) and we’re now able to act accordingly. But I should tell you that going this way is not a free lunch and we achieved a reasonable solution after some programming and testing efforts. If you can invest time, it is probably the kind of solution you can add to your study.

See you